Ellen Hazelkorn, Joint Managing Partner, BH Associates Education Consultants

Perhaps it’s hard to imagine, but there was a time when university rankings did not exist. Although the US News & World Report Best Colleges Rankings [2] are now an entrenched part of the college and university experience in the United States, they have existed only since the 1980s. Until recently, few people outside the U.S. had even heard of university rankings. Today, they have proliferated: rankings now exist in over 40 countries, and the number of global rankings is increasing rapidly.

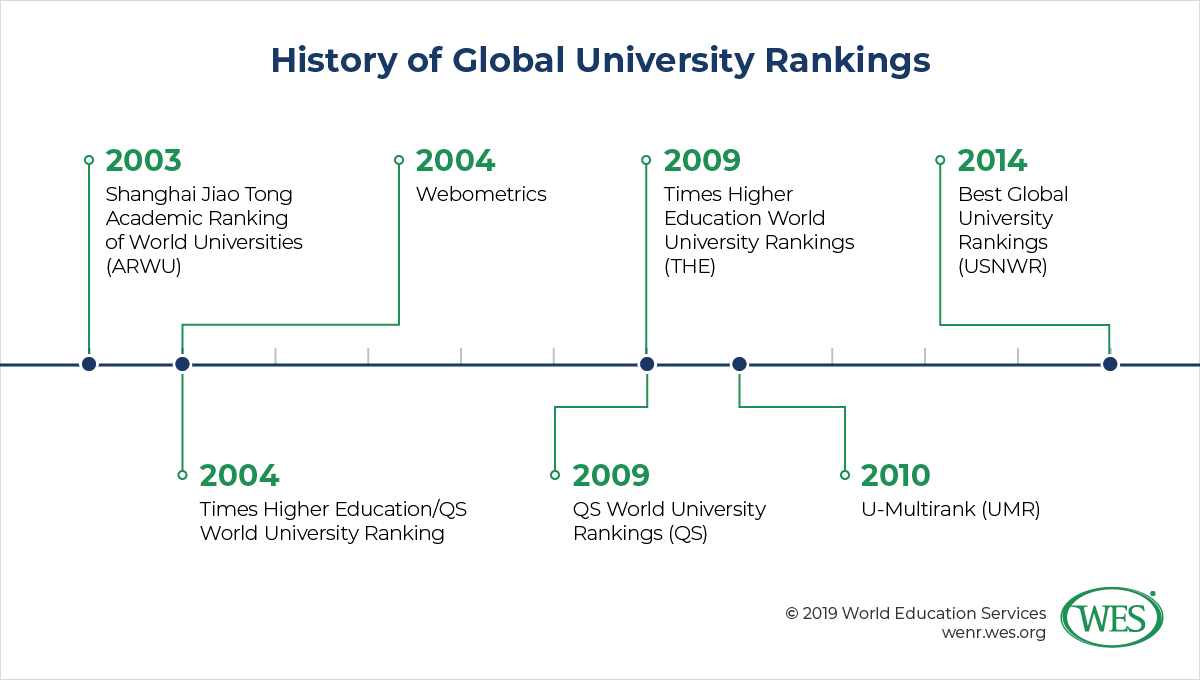

There are now nearly 200 different types of rankings that compare higher education institutions (HEIs) in almost every part of the world. The overwhelming majority are developed by commercial interests, often media companies, with only a small percentage developed by government or public organizations. U-Multirank (UMR), a multidimensional university ranking system developed by the European Union in 2010, is an exception.

The popularity of rankings is growing around the world, and so is their influence. They are widely used by students and their parents, by governments and policy makers, and by colleges and universities.

But do rankings measure quality? Do rankings measure what people think they measure? And can rankings tell us which universities are the “best”?

The Appeal of Rankings

University rankings are popular because they are simple to understand, which is also a main criticism of them. There are national, world-region, and global rankings, each of which may rank HEIs at the organizational level, by field of science (natural science, mathematics, engineering, computer science, social sciences), by discipline or profession (business, law, medicine, graduate schools), by engagement with industry or society, or by other criteria, such as student facilities, safety on campus, or campus life. Global rankings commonly measure institutional performance by focusing on research quality, research output, and reputation.

Rankings have gained influence over the years because they have the appearance of scientific objectivity. They compare colleges and universities using a variety of criteria or indicators, each of which is given a different weight or value. The weighted indicator scores are aggregated into a single number, which is then used to create an ordinal ranking. The lower the rank number, the better the position.

There is no such thing as an objective ranking, however, because there are different views about what is important. The choice of indicators and weightings reflects the priorities or value judgments of those producing the ranking. Because it is difficult to identify direct measurements, the rankings rely on proxies or equivalences. Likewise, it is difficult to identify the most appropriate internationally comparable data. These issues raise questions about the accuracy and meaning of rankings as a measure of quality, performance, and productivity. In a nutshell, there is no agreed-upon international definition of quality [4].
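The aggregation described above, and the way the choice of weightings shapes the result, can be illustrated with a minimal sketch. Everything in it is hypothetical: the three institutions, their indicator scores, and both sets of weights are invented for illustration and do not reproduce the methodology of any actual ranking.

```python
# Illustrative only: institutions, indicator scores, and weights are invented.
indicators = {
    "University A": {"research": 90, "reputation": 80, "teaching": 60},
    "University B": {"research": 70, "reputation": 75, "teaching": 90},
    "University C": {"research": 80, "reputation": 65, "teaching": 75},
}


def composite_scores(data, weights):
    """Return the weighted sum of indicator scores for each institution."""
    return {
        name: sum(weights[key] * values[key] for key in weights)
        for name, values in data.items()
    }


def ordinal_ranking(scores):
    """Map each institution to its rank, where 1 is the highest composite score."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {name: position for position, name in enumerate(ordered, start=1)}


# Two hypothetical weighting schemes reflecting different priorities.
research_heavy = {"research": 0.6, "reputation": 0.3, "teaching": 0.1}
teaching_heavy = {"research": 0.2, "reputation": 0.2, "teaching": 0.6}

rank_research = ordinal_ranking(composite_scores(indicators, research_heavy))
rank_teaching = ordinal_ranking(composite_scores(indicators, teaching_heavy))

print("Research-heavy weights:", rank_research)
print("Teaching-heavy weights:", rank_teaching)
```

With the same underlying data, University A ranks first under the research-heavy weights and last under the teaching-heavy weights. Nothing about the institutions has changed; only the priorities of whoever set the weights.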

There is also considerable criticism of the methodologies rankings use, their choice of indicators, and the reliability of their data. In addition, producing annual comparisons is unwarranted because institutions do not, and cannot, change significantly from year to year.

What do rankings measure?

Although rankings look similar, each one measures different aspects of an HEI, depending on the choice and definition of each indicator, the weightings assigned, and the data source. Global rankings depend on internationally comparative data, but these are imperfect. National contexts resist attempts to make simple comparisons, and there is a significant lack of consistency in how data are defined, collected, and reported.

As a result, global rankings primarily measure research, and this favors the biological and medical sciences because those disciplines are most comprehensively recorded in international bibliometric databases. This bias is further reinforced by the Academic Ranking of World Universities (ARWU), which singles out research published in Nature and Science, and winners of Nobel and Fields [5] prizes, for special attention.

Most rankings also measure the reputation of a university as valued by its faculty peers, students, or employers. However, reputational surveys tend to be subjective, self-referential, and self-perpetuating. It is rare for any one respondent to know more than a handful of universities, so the colleges and universities identified tend to be the ones most often mentioned.

Student surveys can also be problematic because students are prone to applaud their own institution since its status has knock-on implications for their own career prospects.

In contrast to the big three rankings (ARWU, Times Higher Education (THE), and QS), U-Multirank is a multidimensional comparison that emphasizes user customization. It does not create a single score or profile; rather, it encourages comparison of similar types of universities. It measures performance under educational profile, student profile, research involvement, knowledge exchange, international orientation, and regional engagement. This more nuanced approach makes U-Multirank more sophisticated than other rankings, but its complexity may be less appealing than their apparent simplicity.

Teaching and Learning

Many rankings purport to measure education quality. However, because this area is extremely complex, there is a tendency to choose proxies, such as the faculty-student ratio, the ratio of domestic to international students, or the ratio of research income to faculty. But there is little evidence that these indicators provide meaningful information. The faculty-student ratio says more about resources than it does about the quality of teaching and learning [6].

Employability is another area of increasing significance, but employment data often concentrate on the first six to nine months post-graduation and do not distinguish between graduate-level jobs and underemployment. There is a growing focus on graduate salaries, but this too can be highly misleading without accounting for regional location and other considerations.

Without question, teaching and learning make up the fundamental mission of higher education. With few exceptions, undergraduates account for the majority of students enrolled worldwide. However, understanding how and what students learn, and how they change as a result of academic exposure, remains a major challenge, particularly when their prior experience (their pre-entry social capital) is not taken into account.

This debate takes different forms in each country, but emphasis is increasingly being placed on learning outcomes, graduate attributes, life-sustaining skills, and what HEIs are contributing to all of those—or not. The focus is on the gains of learning rather than the status or reputation of the institution. Ultimately, we are mistaken if we think that we can measure teaching, at scale, distinct from the outcomes of learning.

Societal Impact

There has been growing public interest in the outcomes and impact of higher education and commitment to the 17 UN Sustainable Development Goals [7] (SDGs). In response, rankings have also begun to measure universities’ societal engagement.

Both the THE and QS rankings have historically measured societal engagement in terms of research collaboration or third-party or industry-earned income. In April 2019, THE launched its University Impact Ranking [8], which measures activity aligned with the SDGs. QS includes social responsibility within its QS Stars Ranking [9]. While these efforts are admirable, the rankings are essentially measures of research and investment, respectively.

In contrast, U-Multirank has always used a broader range of indicators. Regional engagement is measured as student internships, graduate employment, and engagement with regional organizations, while knowledge transfer is measured as collaboration with industry, patents or spinoffs, and co-publications with industry.

Which universities are ‘best’?

Despite a common nomenclature, rankings differ from each other. “Which university is best” can be asked and answered differently depending upon the ranking and the person asking the question. This variability presents a problem for those who think the results are directly comparable. They are not.

Are the best universities—

- Those which best match the criteria established by the different rankings, or those that help the majority of students earn credentials for a sustainable life and employment?

- Those which choose indicators which best align with society’s social and economic objectives and values, or those which adopt indicators chosen by commercial organizations for their own purposes?

Being “world-class” has become the objective of too many universities and governments. A world-class designation is usually based on a ranking within the top 100. But as we’ve seen, rankings do not measure what we think they measure. They do not measure teaching and learning, the quality of the student experience, or the value of research for learning or for society.

Essentially, rankings measure the outcomes of historical competitive advantage. Elite universities and nations benefit from accumulated public or private wealth and investment over decades, if not centuries. They also benefit from attracting wealthy, high-achieving students who graduate on time and have successful careers. Institutional reputation is too easily conflated with quality, and because reputation takes time to develop, this easy conflation advantages older, established institutions. New universities and those serving non-traditional or adult learners are ignored. All these factors are reproduced in the indicators that rankings use and reinforce.

Measuring and comparing quality, performance, and productivity in higher education is an indispensable strategic tool for policy making and institutional leadership and for the public, including students. However, to be effective, it is important that we ask: Are we measuring what’s meaningful, or are we simply measuring those areas for which data are available? To paraphrase a remark often attributed to Einstein, global rankings focus on what is easily measured rather than on what counts.

Ellen Hazelkorn is joint managing partner at BH Associates education consultants (www.bhassociates.eu [10]). She is Professor Emerita, Technological University Dublin (Ireland), and Joint Editor of Policy Reviews in Higher Education. She is a NAFSA Senior Scholar and was awarded the EAIE Tony Adams Award for Excellence in Research, 2018. Ellen is a member of the Quality Board for Higher Education in Ireland, and previously served as policy advisor to the Higher Education Authority in Ireland.

Ellen is internationally recognised for her writings and analysis of rankings. Publications include: Rankings and the Reshaping of Higher Education [11]: The Battle for World-Class Excellence, 2nd ed. (Palgrave, 2015); editor, Global Rankings and the Geopolitics of Higher Education [12] (Routledge 2016); co-editor, Rankings and Accountability in Higher Education: Uses and Misuses [13] (UNESCO, 2013); co-author, Rankings in Institutional Strategies and Processes: impact or illusion? [14] (EUA, 2014). She is co-editor of Research Handbook on Quality, Performance and Accountability in Higher Education [15] (Edward Elgar 2018). Research Handbook on University Rankings: History, Methodology, Influence and Impact (Edward Elgar 2021) is forthcoming.